Are you tired of struggling to keep up with logging compliance regulations? Look no further!

In this blog post, we are going to unlock the full potential of M2131 logging compliance using the powerful combination of Azure Data Explorer and Azure Data Lake Gen 2.

Discover how these game-changing tools can revolutionize your logging processes, streamline data management, and ensure that your organization stays in line with industry standards. Get ready to dive into a world where compliance becomes effortless and unlocking possibilities becomes second nature!

Introduction to M2131 Logging Compliance

M2131 logging compliance is a crucial aspect of data governance in organizations. It means meeting the event logging requirements of OMB Memorandum M-21-31, which in practice involves recording and tracking activities related to data access, manipulation, and management. These logs are essential for meeting regulatory requirements, identifying security breaches, and monitoring data usage.

In today's fast-paced digital world, businesses are generating vast amounts of data every day. With the rise of cloud computing and remote work, the need for comprehensive logging compliance has become more critical than ever before. This is where M2131 Logging Compliance comes into play.

Kusto Query Language (KQL) is the query language at the core of Azure Data Explorer (ADX). It lets users query structured or semi-structured data stored in ADX and, through external tables, data held in Azure Data Lake Gen 2 (ADL). KQL makes it efficient to filter, transform, and aggregate large datasets.

The primary purpose of M2131 logging compliance is to provide a unified approach to tracking all user activities within your environment. This means that any interaction with data, from querying to loading, can be logged and monitored.

Moreover, ADX and ADL offer an added layer of security by enabling granular control over user permissions, down to the database level in ADX and the directory and file level in ADL. This means that only authorized users have access to specific datasets within the system, reducing the risk of unauthorized data access or tampering.

Another significant benefit of M2131 logging compliance is that it helps organizations meet regulatory requirements. Many industries, such as healthcare and finance, have strict regulations governing data access and management. With M2131-compliant logging in place, organizations can readily provide audit logs that demonstrate compliance with these regulations.

In conclusion, implementing M2131 logging compliance is essential for organizations seeking to maintain data governance and compliance. A compliant logging setup not only allows for tracking of user activities but also provides an additional layer of security and supports adherence to regulatory requirements. By adopting M2131 logging compliance practices, organizations can effectively manage their data while minimizing the risks associated with data breaches.

Understanding the Requirements for M2131 Logging

M2131 logging is a compliance requirement that has become increasingly important in today's digital landscape. With the rise of data breaches and privacy concerns, organizations are under immense pressure to ensure that their log data is properly managed and secured.

But what exactly are the requirements for M2131 logging? In this section, we will delve deeper into the specifics of this compliance standard and explore how Azure Data Explorer (ADX) and Azure Data Lake Gen 2 can help you meet these requirements effectively.

Firstly, let's define what M2131 logging is. M2131, also known as M-21-31, is a memorandum issued by the U.S. Office of Management and Budget (OMB) in 2021. This memorandum, titled "Improving the Federal Government’s Investigative and Remediation Capabilities Related to Cybersecurity Incidents," is part of the federal government's response to increasing cybersecurity threats, particularly in the wake of significant incidents like the SolarWinds breach. M-21-31 provides detailed guidance for federal agencies to enhance their visibility and response capabilities before, during, and after a cybersecurity incident.

The memorandum was issued in support of Executive Order 14028, "Improving the Nation's Cybersecurity," signed by President Biden in May 2021. This executive order aims to bolster cybersecurity across federal civilian agencies and the private sector, focusing on areas such as sharing threat information, securing software development processes, and establishing standard operating procedures for responding to security incidents.

M-21-31 introduces a maturity model for event log management across federal agencies, categorized into four event logging (EL) tiers, ranging from EL0 (Not Effective) to EL3 (Advanced). These tiers define the requirements for log management, such as the types of logs to be collected, data details to be included in the logs, log retention standards, and security measures for log information. For instance, the basic requirements (EL1) include details like timestamp, event type, device ID, IP addresses, and user information in the logs, while the advanced requirements (EL3) encompass automated response playbooks and integration of security tools.
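
To make the EL1 details a little more concrete, here is a minimal sketch of a completeness check for a single log record. The key names (`timestamp`, `event_type`, `device_id`, and so on) are assumptions chosen for illustration; M-21-31 does not prescribe these exact keys, so map them to whatever your log schema uses.

```python
from typing import Any

# Hypothetical key names for the EL1 details mentioned above. M-21-31 does not
# prescribe these exact keys, so rename them to match your own log schema.
REQUIRED_EL1_FIELDS = (
    "timestamp",
    "event_type",
    "device_id",
    "source_ip",
    "destination_ip",
    "user",
)


def missing_el1_fields(record: dict[str, Any]) -> list[str]:
    """Return the required fields that are absent or empty in a log record."""
    return [
        field
        for field in REQUIRED_EL1_FIELDS
        if record.get(field) is None or record.get(field) == ""
    ]


# Example record with an empty user field, which the check should flag.
sample = {
    "timestamp": "2024-01-01T00:00:00Z",
    "event_type": "logon",
    "device_id": "host-1",
    "source_ip": "10.0.0.1",
    "destination_ip": "10.0.0.2",
    "user": "",
}
print(missing_el1_fields(sample))
```

A check like this could run before ingestion so that incomplete records are caught early rather than discovered during an audit.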

Federal agencies are responsible for assessing their current maturity levels against this model and addressing any gaps to meet the specified requirements within set timelines. They are also encouraged to share relevant logs with cybersecurity authorities like the Cybersecurity and Infrastructure Security Agency (CISA) and the FBI to help protect federal information systems and manage security risks.

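In addition to having an audit trail, organizations must also ensure that their log data is protected against tampering and remains easily accessible for at least one year. For most log categories, M-21-31 specifies 12 months of active storage followed by 18 months of cold storage. This ensures that any suspicious activity can be traced back and investigated promptly.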
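
As a rough illustration of the one-year requirement, the sketch below measures how much required history is missing from a log store. The 365-day figure simply mirrors "at least one year" from the text, and the function name is hypothetical.

```python
from datetime import datetime, timedelta, timezone

# "At least one year" of accessible logs, expressed as a duration.
REQUIRED_RETENTION = timedelta(days=365)


def retention_gap(
    oldest_available: datetime,
    now: datetime,
    required: timedelta = REQUIRED_RETENTION,
) -> timedelta:
    """Return how much required history is missing (zero if fully covered)."""
    required_start = now - required
    return max(oldest_available - required_start, timedelta(0))


# Example: the oldest log we can still query is 300 days old.
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
oldest = now - timedelta(days=300)
print(retention_gap(oldest, now))
```

A non-zero result means the store does not yet reach back far enough to cover the full retention window.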

So how can ADX and Azure Data Lake Gen 2 help with meeting these requirements?

Azure Data Explorer offers advanced log analytics capabilities with its real-time streaming ingestion engine.

The Benefits of Using Azure Data Explorer and Azure Data Lake Gen 2 for Logging Compliance

In today's digital landscape, data is everywhere and constantly being generated at a rapid pace. As technology advances, businesses are faced with the challenge of managing and securing this vast amount of data while also ensuring compliance with regulatory requirements. This is where Azure Data Explorer (ADX) and Azure Data Lake Gen 2 (ADL Gen 2) come in.

Azure Data Explorer is a fully managed data analytics service that enables you to query large volumes of data in near real-time. It is designed for analyzing high-velocity streaming data from sources such as applications, devices, and sensors. ADX utilizes a columnar database architecture which makes it highly efficient for querying large datasets quickly.

On the other hand, Azure Data Lake Gen 2 is an enterprise-scale cloud storage solution that allows you to store both structured and unstructured data at any scale. With ADL Gen 2, you can store your raw log files without needing to worry about schema or format. This gives you the flexibility to store all types of logs in one place, making it easier for compliance purposes.

Now let's explore how using these two powerful tools together can bring significant benefits when it comes to logging compliance:

- Centralized Storage: One of the biggest challenges businesses face when it comes to logging compliance is managing multiple log sources scattered across different systems. With ADL Gen 2, you can consolidate all your logs into one centralized location regardless of their format or source. This not only simplifies the process but also ensures integrity and consistency of your logs.

- Near-Real-Time Querying: Compliance regulations often require businesses to have the ability to quickly access and query their log data. ADX enables near-real-time querying, which means you can get insights from your logs as they are being generated. This is especially useful in cases where immediate action needs to be taken based on log data.

- Advanced Analytics: ADX provides powerful analytics capabilities that enable you to extract valuable insights from your log data. You can perform complex queries, create custom dashboards, and even build machine learning models on top of your log data. This not only helps with compliance but also allows you to gain deeper insights into your business operations.

- Scalability: Both ADX and ADL Gen 2 are highly scalable solutions, meaning they can handle large volumes of data without any significant performance impact. This is crucial when dealing with compliance since regulations may require businesses to retain log data for a certain period of time.

- Security: Data security is a critical aspect of logging compliance. Both ADX and ADL Gen 2 encrypt data at rest by default, and you can supply customer-managed keys for added control. Additionally, access control policies can be applied at the storage level, ensuring only authorized users have access to your log data.

In conclusion, the combination of Azure Data Explorer and Azure Data Lake Gen 2 provides a powerful solution for logging compliance. Together, they offer centralized storage, near-real-time querying, advanced analytics, scalability, and security, making it easier for businesses to meet regulatory requirements while also gaining valuable insights from their log data.

How to Set Up Azure Data Explorer and Azure Data Lake Gen 2 for M2131 Logging

In this section, we will guide you through the step-by-step process of setting up Azure Data Explorer and Azure Data Lake Gen 2 for M2131 logging compliance. This is an essential part of unlocking the full potential of M2131 logging compliance, as it allows for efficient storage and analysis of M logs.

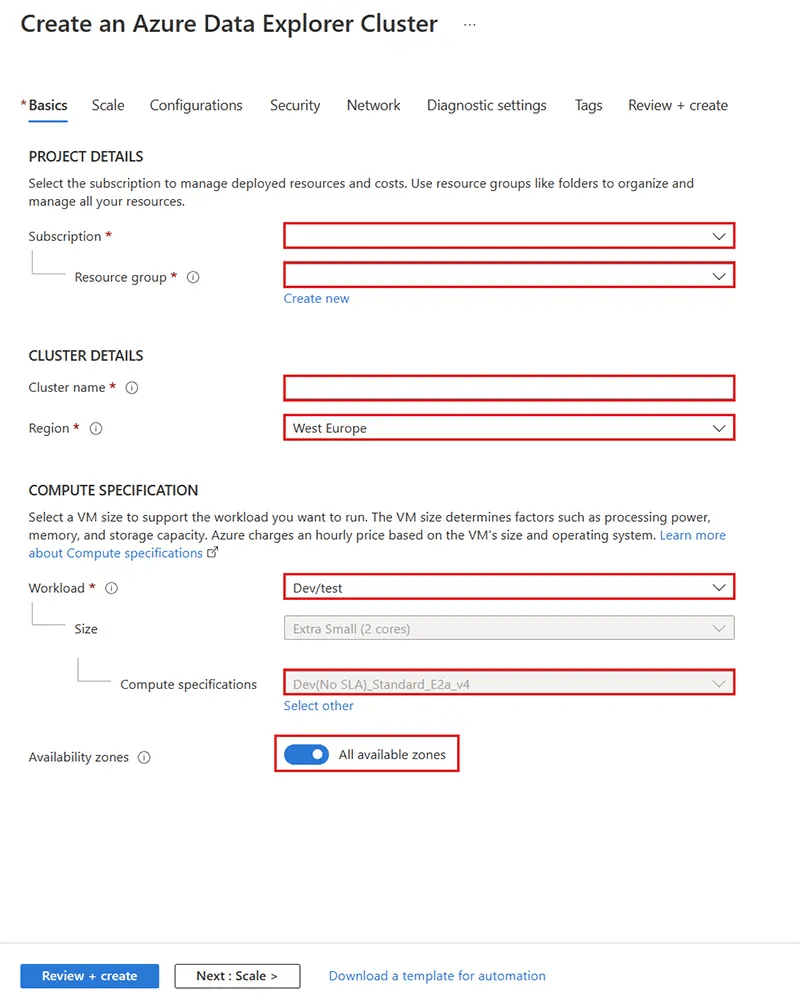

Step 1: Create an Azure Data Explorer Cluster:

The first step is to create an Azure Data Explorer cluster in the Azure portal. Navigate to 'Create a resource', select 'Azure Data Explorer', and click 'Create'. Choose the desired subscription, resource group, and region for your cluster. Give your cluster a meaningful name and select the appropriate pricing tier. Once the cluster is created, you will also need to create a database: open the 'Databases' tab in the cluster and click '+ Add Database'.

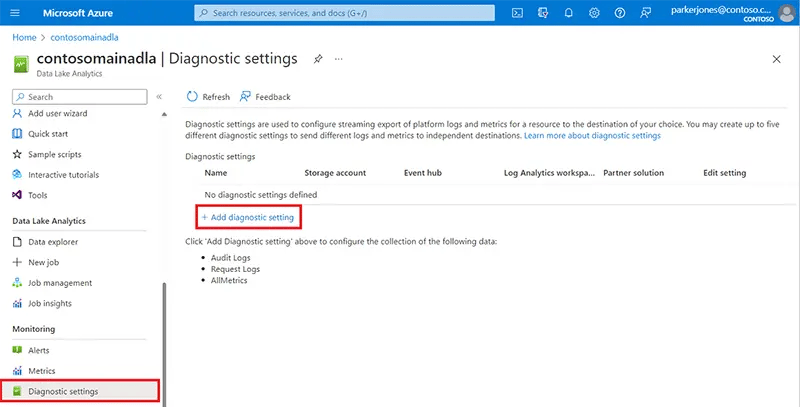

Step 2: Enable Diagnostic Settings:

Next, enable diagnostic settings on your Data Lake Gen 2 storage account. You will need a Log Analytics workspace to receive the logs, so create one first if you do not already have one. In the storage account, open 'Diagnostic settings' and select the blob service. Click '+ Add diagnostic setting', give it a name, select the blob log categories (StorageRead, StorageWrite, StorageDelete), and choose 'Send to Log Analytics workspace' under destination details. Select your Log Analytics workspace and click 'Save'.
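
If you would rather script this step than click through the portal, the setting boils down to a small payload. The sketch below builds one as a plain dictionary. The shape (`properties.workspaceId` plus a `logs` list of category entries) and the category names StorageRead, StorageWrite, and StorageDelete are my assumptions about how blob read/write/delete logging is expressed, and the workspace ID is a placeholder, so check both against your own environment before using it.

```python
import json

# Assumed category names for blob read, write, and delete logging.
BLOB_LOG_CATEGORIES = ("StorageRead", "StorageWrite", "StorageDelete")


def blob_diagnostic_setting(workspace_resource_id: str) -> dict:
    """Build a diagnostic-setting body that sends blob logs to a workspace."""
    return {
        "properties": {
            "workspaceId": workspace_resource_id,
            "logs": [
                {"category": category, "enabled": True}
                for category in BLOB_LOG_CATEGORIES
            ],
        }
    }


# Placeholder resource ID; substitute the ID of your own workspace.
setting = blob_diagnostic_setting("<log-analytics-workspace-resource-id>")
print(json.dumps(setting, indent=2))
```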

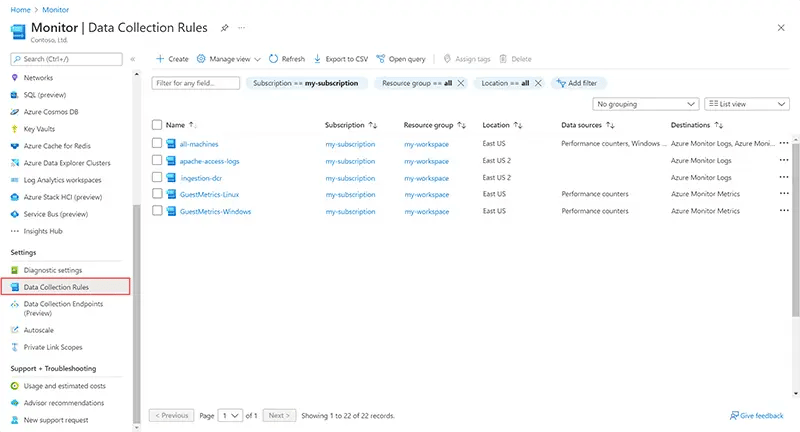

Step 3: Configure Log Collection Rule:

In order to collect the M logs from your data lake gen 2 storage account, you will need to configure a log collection rule. This can be done by going to your Log Analytics workspace's settings page and clicking on 'Advanced Settings'. Under 'Data', click on 'Data Management' and then 'Log Collection Rules'. Click on '+ Add', give the rule a name, select the data source as your data lake gen 2 storage account, and choose the appropriate time frame. Under filter pattern, enter _ResourceId contains "/mlogs/" and click 'Save'.

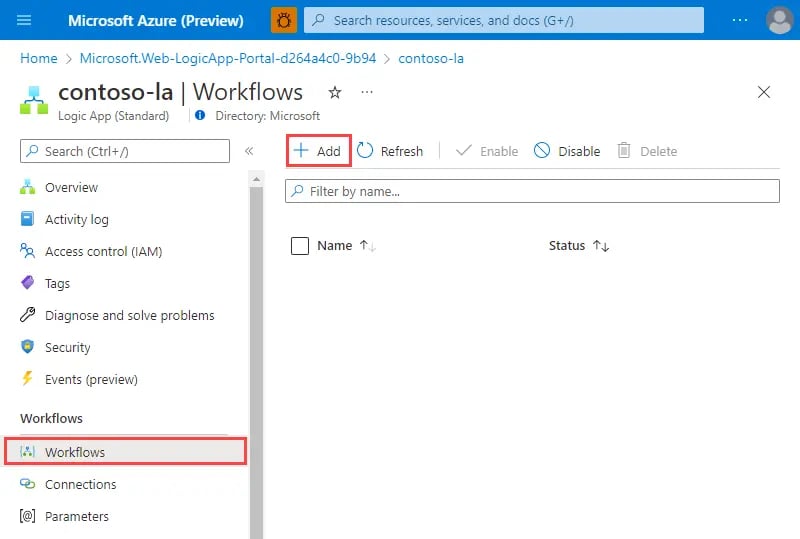

Step 4: Create a Logic App to copy data to ADX:

Once M logs are collected in Log Analytics, you must connect the Log Analytics workspace to Azure Data Explorer. There are several ways to do this, but since the M logs are a custom log, we will create a Logic App. A Logic App acts as a bridge between different services and enables automated workflows. Create one in the Azure portal, then define a workflow that starts with a recurrence trigger. The trigger's frequency determines how often data is copied from Log Analytics into ADX, so choose an interval that matches how fresh the data needs to be. After the recurrence trigger, add an Azure Data Explorer "Run async control command" action. You can use the ".set-or-append" command to create a new table or append data to an existing table in ADX.
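
To give a feel for what the Logic App action might send, here is a small sketch that assembles a ".set-or-append" command for one time window. The source expression (`SourceLogs` in the example) is a hypothetical stand-in for however your workflow reaches the Log Analytics data, and the explicit start and end bounds are my own suggestion for keeping consecutive runs from overlapping; neither comes from the steps above.

```python
from datetime import datetime, timezone


def kql_datetime(ts: datetime) -> str:
    """Format a timezone-aware datetime as a KQL datetime literal in UTC."""
    if ts.tzinfo is None:
        raise ValueError("timestamp must be timezone-aware")
    utc = ts.astimezone(timezone.utc)
    return f"datetime({utc.strftime('%Y-%m-%dT%H:%M:%SZ')})"


def build_append_command(
    target_table: str,
    source_expr: str,
    window_start: datetime,
    window_end: datetime,
    time_column: str = "TimeGenerated",
) -> str:
    """Build a .set-or-append command that copies one half-open time window."""
    return (
        f".set-or-append async {target_table} <|\n"
        f"    {source_expr}\n"
        f"    | where {time_column} >= {kql_datetime(window_start)}"
        f" and {time_column} < {kql_datetime(window_end)}"
    )


# Example: copy one hour of data; "SourceLogs" is a hypothetical source.
start = datetime(2024, 1, 1, 0, 0, tzinfo=timezone.utc)
end = datetime(2024, 1, 1, 1, 0, tzinfo=timezone.utc)
command = build_append_command("M_CL", "SourceLogs", start, end)
print(command)
```

Using a half-open window (start inclusive, end exclusive) means that back-to-back runs neither skip nor duplicate records that fall exactly on a boundary.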

Step 5: Verify Data Ingestion:

To verify that your M logs are being ingested into Azure Data Explorer, open the query editor for your cluster (the 'Query' page in the Azure portal or the Azure Data Explorer web UI), select your database, and run the following query:

M_CL | where TimeGenerated > ago(1h)

This will show you all the M logs that have been ingested in the last hour.

Congratulations! You have now successfully set up Azure Data Explorer and Azure Data Lake Gen 2 for M2131 logging compliance. Your M logs will now be efficiently stored and can be easily analyzed using Azure Data Explorer's powerful querying capabilities.

Best Practices for Managing and Analyzing Logs with Azure Data Explorer and Azure Data Lake Gen 2

Managing and analyzing logs is an essential task for organizations looking to maintain compliance and ensure data security. With the rise of cloud computing, managing large volumes of log data has become increasingly challenging. However, with the help of powerful tools like Azure Data Explorer (ADX) and Azure Data Lake Gen 2, this task can be simplified.

In this section, we will discuss the best practices for managing and analyzing logs using ADX and Azure Data Lake Gen 2.

- Set up a Centralized Log Management System

The first step in effective log management is to set up a centralized log management system. This involves collecting all the logs from different sources into a single repository. With ADX, you can easily ingest data from various sources such as web servers, databases, applications, and IoT devices into a central location.

Azure Data Lake Gen 2 acts as a scalable storage solution for your log data, allowing you to store massive amounts of structured or unstructured data at low cost. This centralized approach makes it easier to manage logs from multiple sources while providing faster access to critical information when needed.

- Define Appropriate Retention Policies

Another important aspect of managing logs is defining appropriate retention policies. Industry regulations or compliance requirements may call for certain types of logs to be retained for specific periods before being deleted. In ADX, you can define a time-based retention policy (a soft-delete period) at the database or table level, and data older than that period is deleted automatically.
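
As a small illustration of the ADX side, the sketch below builds a retention policy command for a table. The table name M_CL is borrowed from the verification query earlier in this post, and the 365-day value is only an example; pick the period your own compliance requirements call for.

```python
def retention_policy_command(
    table: str,
    soft_delete_days: int,
    recoverable: bool = True,
) -> str:
    """Build an ADX command that sets a table's soft-delete retention period."""
    if soft_delete_days <= 0:
        raise ValueError("soft_delete_days must be positive")
    state = "enabled" if recoverable else "disabled"
    return (
        f".alter-merge table {table} policy retention "
        f"softdelete = {soft_delete_days}d recoverability = {state}"
    )


# Example: keep one year of data in the M_CL table.
print(retention_policy_command("M_CL", 365))
```

You would run the resulting command in the ADX query editor against the database that holds the table.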

With Azure Data Lake Gen 2, you can also set up lifecycle management policies to move data from hot storage to cool or archive storage tiers based on your retention requirements. This helps in reducing storage costs while ensuring compliance with retention policies.

- Use ADX Clusters for Large-Scale Log Analytics

ADX is a powerful log analytics platform that can handle large volumes of data and perform complex queries at high speeds. It offers a distributed architecture that allows you to scale up or down as needed, making it an ideal solution for processing and analyzing logs at scale.

By creating clusters in ADX, you can distribute the workload across multiple nodes, enabling faster data processing and analysis. Additionally, with ADX's auto-scaling feature, your cluster can automatically adjust its size based on the volume of data being ingested and queried.

- Take Advantage of ADX Query Performance Tuning

When dealing with large volumes of log data, query performance becomes critical. ADX offers various features to improve query performance, such as columnar storage with automatic indexing, materialized views, and compression.

Columnar storage and indexing let ADX store and retrieve large amounts of data quickly, because a query reads only the columns it needs. Materialized views allow you to pre-compute results for frequently used queries.
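
To show what a materialized view for log data might look like, here is a minimal sketch that builds a command for a daily event count. The view name `DailyEventCounts` and the `EventType` column are hypothetical; swap in whatever aggregation your frequent queries actually rely on.

```python
def materialized_view_command(
    view: str,
    source_table: str,
    group_by_column: str,
    bin_size: str = "1d",
    time_column: str = "TimeGenerated",
) -> str:
    """Build an ADX command that pre-aggregates event counts per time bin."""
    return (
        f".create materialized-view {view} on table {source_table}\n"
        "{\n"
        f"    {source_table}\n"
        f"    | summarize EventCount = count() "
        f"by {group_by_column}, bin({time_column}, {bin_size})\n"
        "}"
    )


# Example: daily counts per event type; EventType is a hypothetical column.
view_command = materialized_view_command("DailyEventCounts", "M_CL", "EventType")
print(view_command)
```

Queries that only need daily totals can then read from the view instead of scanning the raw log table each time.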

Case Study: Real-Life Example of M2131 Logging Compliance with Azure Data Explorer and Azure Data Lake Gen 2

Introduction:

In today's digital landscape, data is constantly being generated and collected at an unprecedented rate. With the increasing importance of data privacy and security, organizations are required to comply with various regulatory standards and mandates such as HIPAA, GDPR, and SOX. This has made logging compliance a crucial aspect for businesses to ensure the protection of sensitive data.

Azure Data Explorer (ADX) and Azure Data Lake Gen 2 (ADL) are two powerful tools that can help organizations achieve compliance with ease. In this section, we will explore a real-life case study of how Everwell Health Services successfully utilized ADX and ADL to enhance their logging compliance process.

Background:

Everwell is a leading healthcare organization that handles large volumes of sensitive patient data on a daily basis. As per industry regulations, they were required to maintain detailed logs of all their systems, applications, and user activities in order to demonstrate compliance with HIPAA and GDPR standards.

Challenges Faced:

Prior to implementing ADX and ADL, Everwell's logging process was manual and relied heavily on traditional log files which proved to be time-consuming and error-prone. The lack of centralized storage also made it difficult for them to quickly access specific logs when needed.

Another major challenge faced by Everwell was managing the massive volume of incoming data generated from multiple sources, including servers, applications, databases, and network devices. This placed a significant burden on their existing infrastructure, resulting in slow query performance and increased storage costs.

Solution:

To address their logging compliance challenges, Everwell decided to leverage the power of Azure Data Explorer and Azure Data Lake Gen 2. They implemented a centralized data analytics platform that could handle large volumes of data while providing real-time access to logs for analysis.

Azure Data Explorer was used to ingest and store all their log data in a centralized location. Its ability to process massive amounts of structured and unstructured data in real-time enabled Everwell to streamline their logging process. With ADX, they were able to quickly query and analyze logs from various sources without any performance degradation.

To further enhance their logging compliance process, Everwell used Azure Data Lake Gen 2 to store raw unprocessed log files. This helped them maintain an audit trail of all changes made to the logs over time, providing a complete view of all activities.

Results:

By leveraging ADX and ADL, Everwell was able to overcome their logging compliance challenges and achieve the following results:

- Centralized Storage: All log data was consolidated into a single location making it easier for Everwell's IT team to manage and maintain.

- Real-Time Access: Azure Data Explorer's ability to process real-time queries enabled Everwell's IT team to quickly access specific log data for analysis, enhancing their incident response time.

- Cost Savings: The use of Azure Data Explorer and Azure Data Lake Gen 2 helped Everwell reduce infrastructure costs as they no longer needed to maintain multiple servers for log storage and processing.

- Compliance: With centralized storage and real-time access to logs, Everwell was able to easily demonstrate compliance with HIPAA and GDPR regulations during audits.

Conclusion:

The implementation of Azure Data Explorer and Azure Data Lake Gen 2 has greatly improved Everwell's logging compliance process. They are now better equipped to handle large volumes of data while ensuring the security and privacy of sensitive information. As a result, they can focus on providing quality healthcare services to their patients without worrying about compliance issues.